The End of Visual Truth?

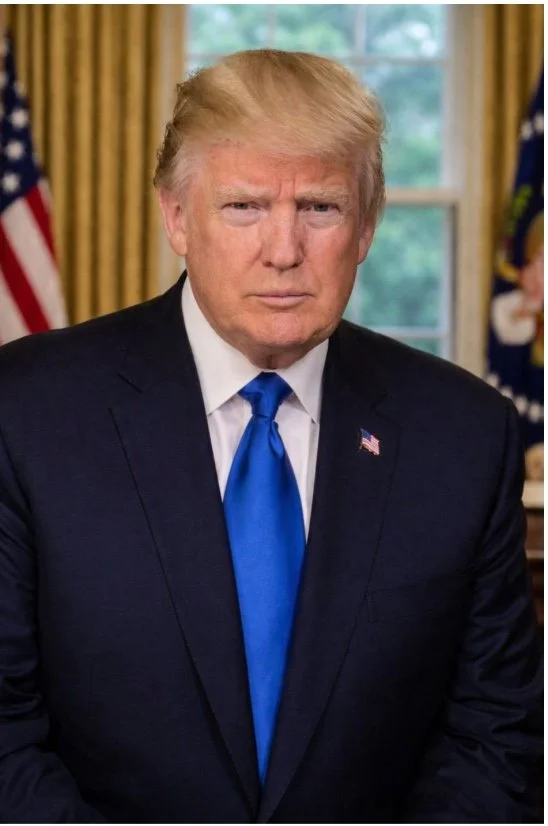

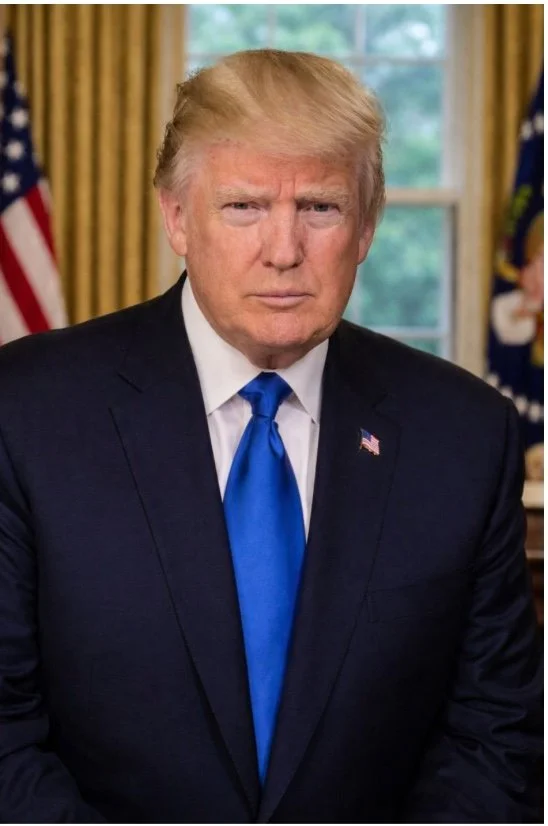

Can we still tell the difference between real and A.I.-generated images? We decided to put this to the test by conducting a survey in which we showed participants a mix of real and A.I.-generated images and asked for their verdict. Before we show you the results, why not have a go yourself with the images below? Don’t scroll too far! The answers and survey results are at the bottom of the article.

Did you guess correctly? Here are the answers:

Real

Real

AI

AI

Real

AI

AI (blue tie)

Real (red tie)

Survey Results:

Result: 54% AI, 46% Real

Answer: Real

Result: 63% AI, 37% Real

Answer: AI

Result: 37% AI, 63% Real

Answer: AI

Result: 37% AI, 63% Real

Answer: Real

Result: 23% AI, 77% Real

Answer: Real

Result: 57% AI, 43% Real

Answer: Real

Result: 86% AI, 14% Real

Answer: AI

Result: 54% AI, 46% Real

Answer: AI

So how can we tell which images are A.I.-generated? Here are some common telltale signs:

Blurred, “clean” background

A dream-like haze

Inconsistent shadows

Details that look too sharp

Overly smooth or airbrushed skin

Images that are “too perfect” – AIs forget that reality is flawed.

Text – AI is very bad at making images that contain text, so look out for jumbled letters.

Hands – malformed hands are less common now that AIs keep improving, but still check whether something looks off about the hands, or whether a person is hiding their hands in the image.

Weird arrangements – can you see any details that make no sense or seem to serve no purpose? A door to nowhere, perhaps, or a screw holding nothing in place?

In videos, ask yourself if the behaviour of the person makes sense.

Even with these rules of thumb, certain dangers are inevitable as AI becomes ever more competent at generating realistic photos and as prompt engineering for such images becomes more mainstream. The most common problem today is deepfakes, which open the door to a new class of scams in which fabricated images are used to establish false credibility, manufacture evidence, or emotionally manipulate victims. Deepfakes have also been used to create pornography, most famously with Grok, which has since banned users from “undressing” images of real people.

Beyond this, when images can no longer be reliably traced to a real event, photographic evidence loses its authority, making fake news harder to refute and unreliable sources harder to distinguish from legitimate ones.

A.I. image generation also raises a serious data-privacy issue: many A.I. image models are trained on vast datasets scraped from the internet, absorbing metadata, stylistic patterns, and even identifiable features from real photographs. As a result, models can generate images of real people without their knowledge or consent.

Finally, there is the quieter but no less troubling issue of bias. AIs do not generate images neutrally; they reproduce and amplify the values embedded in their training data. When prompted to create an image of a “beautiful woman” (see below), for instance, the result often reflects narrow, culturally loaded standards: youth, thinness, flawless skin, long hair, Eurocentric features. In this way, A.I.-generated images risk not only deceiving us about what is real, but also subtly reshaping our sense of what is normal, desirable, or true.